A load-balancer in an infrastructure

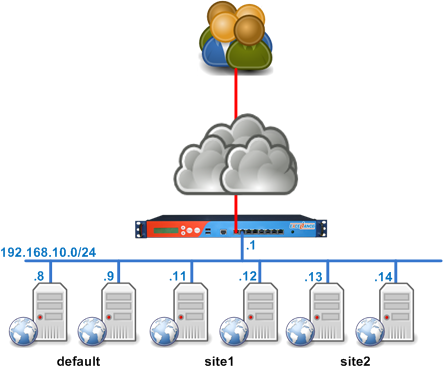

The picture above shows how we usually install a load-balancer in an infrastructure.

This is a logical diagram. When working at layer 7 (aka Application layer), the load-balancer acts as a reverse proxy.

So, from a physical point of view, it can be plugged anywhere in the architecture:

- in a DMZ

- in the server LAN

- in front of the servers, acting as the default gateway

- far away, in another, separate datacenter

Why is load-balancing a web application a problem?

Well, HTTP is not a connected protocol: it means that the session is totally independent of the TCP connections.

Even worse, an HTTP session can be spread over several TCP connections…

When there is no load-balancer involved, there won’t be any issue at all: the single application server is aware of the session information of all users and, whatever the number of client connections, they all reach that unique server.

When using several application servers, the problem occurs: what happens when a user sends requests to a server which is not aware of their session?

The user is sent back to the login page since the application server can’t access their session: they are considered a new user.
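To make the problem concrete, here is a minimal Python sketch. The `AppServer` class, the session id and the user name are all made up for illustration, and each server is assumed to keep its sessions in local memory only. Requests are spread round-robin with no persistence at all:

```python
from itertools import cycle


class AppServer:
    """Hypothetical application server keeping sessions in local memory only."""

    def __init__(self, name):
        self.name = name
        self.sessions = {}  # session id -> user name

    def login(self, session_id, user):
        self.sessions[session_id] = user

    def handle(self, session_id):
        # A server that has never seen this session treats the user as new.
        if session_id in self.sessions:
            return f"{self.name}: hello {self.sessions[session_id]}"
        return f"{self.name}: please log in"


servers = [AppServer("s1"), AppServer("s2")]
round_robin = cycle(servers)

# First request: the user logs in on the first server picked (s1).
next(round_robin).login("abc123", "alice")

# The next two requests go to s2, then back to s1.
responses = [next(round_robin).handle("abc123") for _ in range(2)]
print(responses)
```

The request that lands on s2 gets the login page, even though the user is still logged in on s1.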

To avoid this kind of problem, there are several ways:

- Use a clustered web application server where the sessions are available to all the servers

- Share users’ session information in a database or a file system accessible to all application servers

- Use IP level information to maintain affinity between a user and a server

- Use application layer information to maintain persistence between a user and a server

NOTE: you can mix the different techniques listed above.

Building a web application cluster

Only a few products on the market allow administrators to create a cluster (like WebLogic, Tomcat, JBoss, etc…).

I’ve never configured any of them, but according to the administrators I talk to, it does not seem to be an easy task.

By the way, for web applications, clustering does not mean scaling. Later, I’ll write an article explaining why, even if you’re clustering, you may still need a load-balancer in front of your cluster to build a robust and scalable application.

Sharing user’s session in a database or a file system

This technique applies to application servers which have no clustering features, or when you don’t want to enable them.

It is pretty simple: you choose a way to share your sessions, usually a file system like NFS or CIFS, a database like MySQL or SQL Server, or memcached. Then you configure each application server with the proper parameters to share the sessions and to access them when required.

I’m not going to give any details on how to do it here, just google with proper keywords and you’ll get answers very quickly.
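Still, the idea itself fits in a few lines. The sketch below is only an illustration: `SharedSessionStore` is a made-up in-memory stand-in for whatever shared backend you pick (database, NFS directory, memcached…), and `AppServer` is a hypothetical application server, not the API of any real product.

```python
class SharedSessionStore:
    """In-memory stand-in for a shared backend (database, NFS, memcached...)."""

    def __init__(self):
        self._data = {}

    def save(self, session_id, data):
        self._data[session_id] = data

    def load(self, session_id):
        return self._data.get(session_id)


class AppServer:
    """Hypothetical application server reading sessions from the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store  # every server points at the same store

    def login(self, session_id, user):
        self.store.save(session_id, {"user": user})

    def handle(self, session_id):
        session = self.store.load(session_id)
        if session is None:
            return f"{self.name}: please log in"
        return f"{self.name}: hello {session['user']}"


store = SharedSessionStore()
s1, s2 = AppServer("s1", store), AppServer("s2", store)

s1.login("abc123", "alice")   # the session is created through s1...
reply = s2.handle("abc123")   # ...and s2 can still find it
print(reply)
```

Since both servers read the same store, it no longer matters which one receives a given request.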

IP source affinity to server

An easy way to maintain affinity between a user and a server is to use the user’s IP address: this is called Source IP affinity.

There are a lot of issues with doing that and I’m not going to detail them right now (TODO++: another article to write).

The only thing you have to know is that source IP affinity is the last method to consider when you want to “stick” a user to a server.

Well, it’s true that it will solve our issue as long as the user uses a single IP address and never changes it during the session.
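Here is a rough Python sketch of the principle. The CRC32-modulo hash is only an assumption picked for illustration (it is not the hash function HAProxy uses), and `pick_by_source_ip` and the IP addresses are made up:

```python
import zlib

SERVERS = ["s1", "s2"]


def pick_by_source_ip(client_ip, servers=SERVERS):
    """Deterministically map a client IP to a server (illustrative hash only)."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]


# The same source IP always lands on the same server...
first = pick_by_source_ip("203.0.113.1")
again = pick_by_source_ip("203.0.113.1")

# ...but a user whose IP changes mid-session may be sent somewhere else.
after_ip_change = pick_by_source_ip("203.0.113.4")
print(first, again, after_ip_change)
```

Nothing here looks at the session itself: the choice depends only on the address the request comes from, which is why this method is fragile.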

Application layer persistence

Since a web application server has to identify each user individually, to avoid serving one user’s content to another, we may use this information, or at least try to reproduce the same behavior in the load-balancer, to maintain persistence between a user and a server.

The information we’ll use is the Session Cookie, either one set by the load-balancer itself or one set up by the application server.

What is the difference between Persistence and Affinity

Affinity: this is when we use information from a layer below the application layer to keep a client’s requests on a single server

Persistence: this is when we use Application layer information to stick a client to a single server

Sticky session: a sticky session is a session maintained by persistence

The main advantage of persistence over affinity is that it’s much more accurate. Sometimes, though, persistence is not doable, so we must rely on affinity.

Using persistence, we mean that we’re 100% sure that a user will always be sent to the same server.

Using affinity, we mean that the user may be sent to the same server…

What is the interaction with load-balancing?

In a load-balancer, you can choose between several algorithms to pick a server from a web farm to forward your client requests to.

Some algorithms are deterministic, which means they can use client side information to choose the server and always send the owner of this information to the same server. This is where you usually do Affinity 😉 e.g. “balance source”

Some algorithms are not deterministic, which means they choose the server based on internal information, whatever the client sent. This is where you do neither affinity nor persistence 🙂 e.g. “balance roundrobin” or “balance leastconn”

I don’t want to go too deep in details here, this can be the purpose of a new article about load-balancing algorithms…

You may be wondering: “we have not yet spoken about persistence in this chapter”. That’s right, let’s do it.

As we saw previously, persistence means that the server can be chosen based on application layer information.

This means that persistence is another way to choose a server from a farm, just as the load-balancing algorithm does.

Actually, session persistence has precedence over the load-balancing algorithm.

Let’s show this on a diagram:

           Client request
                 |
                 V
          HAProxy Frontend
                 |
                 V
           backend choice
                 |
                 V
          HAProxy Backend
                 |
                 V
      Does the request contain
      persistence information ---------
                 |                    |
                 | NO                 |
                 V                    |
          Server choice by            | YES
      load-balancing algorithm        |
                 |                    |
                 V                    |
         Forwarding request <----------
           to the server

Which means that when doing session persistence in a load balancer, the following happens:

- the user’s first request comes in without session persistence information

- the request bypasses the session persistence server choice since it has no session persistence information

- the request passes through the load-balancing algorithm, where a server is chosen and assigned to the client

- the server answers back, setting its own session information

- depending on its configuration, the load-balancer can either use this session information or set up its own before sending the response back to the client

- the client sends a second request, now with the session information it learnt during the first request

- the load-balancer chooses the server based on the client side information

- the request DOES NOT PASS THROUGH the load-balancing algorithm

- the server answers the request

and so on…
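The steps above can be sketched in a few lines of Python. This is only a model of the decision, not HAProxy code: `choose_server`, the `SERVERID` cookie name and the round-robin fallback are assumptions chosen for the example.

```python
from itertools import cycle

SERVERS = ["s1", "s2"]
_round_robin = cycle(SERVERS)


def choose_server(request_cookies, cookie_name="SERVERID"):
    """Return (server, used_algorithm) for one request."""
    persisted = request_cookies.get(cookie_name)
    if persisted in SERVERS:
        # Persistence information present: the algorithm is skipped entirely.
        return persisted, False
    # No (or unknown) persistence information: the algorithm picks a server.
    return next(_round_robin), True


# First request: no cookie yet, so the load-balancing algorithm decides.
first_server, used_algo_first = choose_server({})

# Second request: the client sends back what it learnt from the first response.
second_server, used_algo_second = choose_server({"SERVERID": first_server})

print(first_server, used_algo_first, second_server, used_algo_second)
```

Only the first request goes through the round-robin step; the second one is routed by the cookie alone.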

At HAProxy Technologies we say that “Persistence is an exception to load-balancing”.

And the demonstration is just above.

Affinity configuration in HAProxy / Aloha load-balancer

The configuration below shows how to do affinity within HAProxy, based on client IP information:

    frontend ft_web
        bind 0.0.0.0:80
        default_backend bk_web

    backend bk_web
        balance source
        hash-type consistent # optional
        server s1 192.168.10.11:80 check
        server s2 192.168.10.21:80 check

Web application persistence

In order to provide persistence at application layer, we usually use Cookies.

As explained previously, there are two ways to provide persistence using cookies:

- Let the load-balancer set up a cookie for the session.

- Use an application cookie, such as ASP.NET_SessionId, JSESSIONID, PHPSESSID, or any other chosen name

Session cookie setup by the Load-Balancer

The configuration below shows how to configure HAProxy / Aloha load balancer to inject a cookie in the client browser:

    frontend ft_web
        bind 0.0.0.0:80
        default_backend bk_web

    backend bk_web
        balance roundrobin
        cookie SERVERID insert indirect nocache
        server s1 192.168.10.11:80 check cookie s1
        server s2 192.168.10.21:80 check cookie s2

Two things to notice:

1/ the line “cookie SERVERID insert indirect nocache”:

This line tells HAProxy to set up a cookie called SERVERID only if the user did not come with such a cookie. It also adds a “Cache-control: private” header to the response, since this type of traffic is supposed to be personal and we don’t want any shared cache on the internet to cache it.

2/ the statement “cookie XXX” on the server line definition:

It provides the value of the cookie inserted by HAProxy. When the client comes back, then HAProxy knows directly which server to choose for this client.

So what happens?

1/ At the first response, HAProxy will send the client the following header, if the server chosen by the load-balancing algorithm is s1:

Set-Cookie: SERVERID=s1

2/ For the second request, the client will send a request containing the header below:

Cookie: SERVERID=s1

Basically, this kind of configuration is compatible with active/active Aloha load-balancer cluster configuration.

Using application session cookie for persistence

The configuration below shows how to configure HAProxy / Aloha load balancer to use the cookie set up by the application server to maintain persistence between a server and a client:

    frontend ft_web
        bind 0.0.0.0:80
        default_backend bk_web

    backend bk_web
        balance roundrobin
        cookie JSESSIONID prefix nocache
        server s1 192.168.10.11:80 check cookie s1
        server s2 192.168.10.21:80 check cookie s2

Just replace JSESSIONID by your application cookie. It can be anything, like the default ones from PHP and IIS: PHPSESSID and ASP.NET_SessionId.

So what happens?

1/ At the first response, the server will send the client the following header

Set-Cookie: JSESSIONID=i12KJF23JKJ1EKJ21213KJ

2/ when passing through HAProxy, the cookie is modified like this:

Set-Cookie: JSESSIONID=s1~i12KJF23JKJ1EKJ21213KJ

Note that the cookie value in the Set-Cookie header has been prefixed with the server cookie value (“s1” in this case) and a “~” is used as a separator between this information and the original cookie value.

3/ For the second request, the client will send a request containing the header below:

Cookie: JSESSIONID=s1~i12KJF23JKJ1EKJ21213KJ

4/ HAProxy will strip the prefix on the fly, so the server receives the cookie as it originally set it:

Cookie: JSESSIONID=i12KJF23JKJ1EKJ21213KJ

Basically, this kind of configuration is compatible with active/active Aloha load-balancer cluster configuration.
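The rewriting described in steps 2/ and 4/ can be mimicked in Python. This is a sketch of the string manipulation only, not HAProxy’s implementation: the function names `prefix_set_cookie` and `split_request_cookie` are made up for the example.

```python
def prefix_set_cookie(value, server_cookie, sep="~"):
    """Response path: prepend the server cookie value to the application cookie."""
    return f"{server_cookie}{sep}{value}"


def split_request_cookie(value, known_servers, sep="~"):
    """Request path: return (server, original value).

    The server is None when the value carries no known server prefix.
    """
    prefix, found, rest = value.partition(sep)
    if found and prefix in known_servers:
        return prefix, rest
    return None, value


# What the client receives, given what the server sent.
sent_to_client = prefix_set_cookie("i12KJF23JKJ1EKJ21213KJ", "s1")

# What the load-balancer extracts from the client's next request.
server, sent_to_server = split_request_cookie(sent_to_client, {"s1", "s2"})
print(sent_to_client, server, sent_to_server)
```

The server name is used to pick the backend server, and the application only ever sees the cookie value it generated itself.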

What happens when my server goes down?

When doing persistence, if a server goes down, then HAProxy will redispatch the user to another server.

Since the user gets connected to a new server, this one may not be aware of the session, so the user may be redirected to the login page.

But this is not a load-balancer problem, this is related to the application server farm.
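A small Python sketch of that situation, under made-up assumptions: the `healthy` map stands in for the result of health checks, `sessions` holds per-server local sessions, and `route` is a hypothetical helper.

```python
# Per-server local sessions: only s1 knows about session "abc123".
sessions = {"s1": {"abc123": "alice"}, "s2": {}}

# Health check results: s1 has just failed.
healthy = {"s1": False, "s2": True}


def route(persisted_server):
    """Honor persistence while the server is up, otherwise redispatch."""
    if healthy.get(persisted_server):
        return persisted_server
    # Redispatch to the first healthy server (raises StopIteration if none is up).
    return next(name for name, up in healthy.items() if up)


server = route("s1")                      # the cookie says s1, but s1 is down
logged_in = "abc123" in sessions[server]  # s2 has never seen this session
print(server, logged_in)
```

The load-balancer did its job by finding a healthy server; whether the session survives depends on how the application servers share it.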

Source: https://www.haproxy.com/blog/load-balancing-affinity-persistence-sticky-sessions-what-you-need-to-know/